Abstracts of Keynote Lectures

- Matthias Aschenbrenner.

The model theory of transseries

Abstract:

Transseries were introduced in the 1980s by the analyst Écalle in his work on Hilbert's 16th Problem,

and also, independently, by the model theorists Dahn and Göring in their work around Tarski's problem

on real exponentiation. They naturally arise as asymptotic expansions for germs of functions definable

in certain o-minimal structures. Since the late 1990s, van den Dries and myself, later joined by

van der Hoeven, have pursued a program to understand the model theoretic aspects of this intricate but

fascinating mathematical object. In last few months we were able to make a significant step forward,

and established a quantifier elimination theorem for the differential field of transseries in a natural language.

My goal for this talk is to introduce transseries and to explain our recent work.

- Manuel Bodirsky.

Reconstructing countably categorical structures from their polymorphism clones

Abstract:

Two countably categorical countable structures have isomorphic

topological automorphism groups if and only if they are first-order bi-interpretable, by

a theorem of Ahlbrandt and Ziegler. For surprisingly many structures we can

replace the topological automorphism group by the (abstract) automorphism group and the

statement remains valid.

In this talk I will discuss to which degree we can recover a countably categorical structure

from its endomorphism monoid, or its polymorphism clone, instead of its automorphism group.

Two countably categorical countable structures have isomorphic topological

clones if and only if they are primitive positive bi-interpretable. We suspect that also here,

we can typically replace the topological clone by the (abstract) clone and the statement remains valid,

and show this for the situation where one of the structures is the Rado graph.

Joint work with Michael Pinsker and Andras Pongracz

- Stefania Centrone.

Proofs without detours: a retrospective perspective

Abstract:

I will present and discuss some interesting aspects of Bernard Bolzano's reflections on the notion of "rigorous proof".

- Victoria Gitman.

Choice schemes for Kelley-Morse set theory

Abstract:

The defining feature of the second-order Kelley-Morse (KM) set theory is that its class comprehension scheme includes

all second-order assertions. The full choice scheme includes, for every second-order formula $\phi$, the assertion that

if for every set $x$, there is a class $Y$ such that $\phi(x,Y)$ holds, then there is a single class $Z$ that has among

its slices a witnessing class for every $x$. The choice scheme can be stratified by formula complexity and has obvious

set-sized variants. Choice schemes for KM were first studied by Mostowski and Marek. They have been considered in the

context of nonstandard set theory and more recently in analyzing class forcing extensions. I will show that even the

weakest choice scheme, namely choice for omega and formulas with no second-order quantifiers, is already independent of KM.

This provides an interesting contrast with the situation in second-order arithmetic, where $Z_2$, the analogue of KM,

proves all instances of $\Sigma^1_2$ choice, but the choice scheme may fail at the $\Sigma^1_3$ level. I will also show

that the full choice scheme does not follow from the set-sized version. Finally, I will argue that KM+, which is KM

together with the choice scheme, is a more robust theory than KM because it is preserved by ultrapowers of the universe

and proves that second-order complexity classes are closed under first-order quantification, while it is consistent that

ultrapowers of KM models fail to even satisfy KM and that in a model of KM, second-order complexity classes can fail to

be closed under even bounded first-order quantification. This is joint work with Joel David Hamkins and Thomas Johnstone.

- Fairouz Kamareddine.

Computerising mathematical text, debugging proof assistants and object versus meta level

Abstract:

Mathematical texts can be computerised in many ways that capture differing

amounts of the mathematical meaning. At one end, there is document imag-

ing, which captures the arrangement of black marks on paper, while at the other

end there are proof assistants (e.g., Mizar, Isabelle, Coq, etc.), which capture the

full mathematical meaning and have proofs expressed in a formal foundation of

mathematics. In between, there are computer typesetting systems (e.g., LATEX and

Presentation MathML) and semantically oriented systems (e.g., Content MathML,

OpenMath, OMDoc, etc.). In this talk we advocate a style of computerisation

of mathematical texts which is flexible enough to connect the different approaches

to computerisation, which allows various degrees of formalisation, and which is

compatible with different logical frameworks (e.g., set theory, category theory,

type theory, etc.) and proof systems. The basic idea is to allow a man-machine

collaboration which weaves human input with machine computation at every step

in the way. We propose that the huge step from informal mathematics to fully

formalised mathematics be divided into smaller steps, each of which is a fully

developed method in which human input is minimal. We also propose a method

based on constraint generation and solving to debug proof assistants written in

SML and reflect on the need and limitation of mixing the object and metalevel.

- Anca Muscholl.

Distributed synthesis

Abstract:

Synthesis is about constructing systems that behave correctly w.r.t. to a given specification.

Synthesizing distributed systems is an attractive goal, since such systems are notoriously difficult to get right.

Unfortunately, there are very few known decidable frameworks for distributed synthesis.

We will discuss one such framework, stated in the rendez-vous model of Zielonka automata. In this setting,

synthesis is decidable for all omega-regular local specifications, under the restriction that the communication

graph of the system is acyclic.

- Olivier Roy.

The logic of obligations as weakest permissions

Abstract:

This will be a talk about deontic logic. This is the logic of

obligations and permissions. I will introduce a new understanding of

these deontic modals. I call it the logic of "obligation as weakest

permission." In this logic obligations and permissions provide,

respectively, necessary and sufficient conditions for an action to be

legal. I will argue for the philosophical plausibility of this view,

present its non-normal modal logic, as well as a cut-free hypersequent

calculus for it, and show how it can be extended and applied to

practical norms in decision and game theory. This is join work with

Albert Anglberger (LMU Munich) and Norbert Gratzl (LMU Munich)

- Daniel Turetsky.

Algorithmic Randomness and the Turing Degrees

Abstract:

Algorithmic randomness uses the tools of computability theory to analyze the question,

"When is an infinite binary sequence random?". Several different definitions of a random sequence are studied,

with the most common being Martin-Löf randomness.

Randomness has a rich interaction with the Turing degrees of more classical computability theory,

in particular with the c.e. degrees and the PA degrees. For example, if a random cannot compute

$0'$ (or equivalently, cannot compute a PA degree), then every c.e. set which it can compute is

$K$-trivial, which means that randomness considers it useless as an oracle; despite being non-computable,

it has no derandomization power. The covering problem asks if this can be reversed: is every $K$-trivial computable

from a random that does not compute $0'$?

I will review the appropriate definitions and discuss the covering problem and similar questions.

I will also discuss the proof of the covering problem and how it touches on the interaction of algorithmic randomness and effective analysis.

Abstracts of PhD Colloquium Lectures

- Dominik Adolf.

The core model induction

Abstract:

The core model induction, originally introduced by Hugh Woodin,

is a versatile method to compute lower bounds for the consistency strength of extension of ZFC.

We hope to introduce the basic concepts and discuss some recent developmenmts.

- Marcos Cramer.

The Naproche system: Proof-checking mathematical texts in controlled natural language

Abstract:

We have developed a controlled natural language (CNL) – i.e. a subset of a natural language defined through a formal grammar –

for writing mathematical texts. The Naproche system can check the correctness of mathematical proofs written in this CNL.

In this talk we will highlight some interesting logical and linguistic problems that had to be solved in the development of

the Naproche CNL and the Naproche system: The system uses a formalism from the linguistic theory of presuppositions in order

to work with potentially undefined terms, and can handle dynamic quantification, including the phenomenon of implicit dynamic

function introduction, which had previously not been discussed in the literature.

- Tomer Kotek.

Definability of Combinatorial Functions

Abstract:

In recent years there has been growing interest in graph polynomials,

functions from graphs to polynomial rings which are invariant to isomorphism.

Graph polynomials encode information about the input graphs e.g. in their evaluations, coefficients, degree and roots.

Many researchers studied specific graph polynomials such as the chromatic polynomial, the Tutte polynomial,

the interlace polynomial or the matching polynomial. In this talk a unified logical framework for graph polynomials

will be presented. Using this framework, the definability of graph polynomials which count generalized colorings

will be compared with with that of graph polynomials defined as subset expansions. A method of showing non-definability

of graph polynomials using connection matrices will be discussed.

Applications of this method to non-definability proofs of graph classes will be presented.

- Davide Rinaldi.

Formal Topology, Information Systems and Finite Density

Abstract:

Scott introduced Information Systems to make available a simpler and constructive presentation of algebraic domains.

We propose here an alternative description, based on Sambin's Formal Topology, and we investigate the status of the

corresponding Kleene-Kreisel density theorem, stating that total ideals are dense in the finitely generated partial

ideals of a given type. By means of general tools in formal topology, we give a finite and constructive version of

it for non-flat domains.

Abstracts of Contributed Talks

- Geghard Bedrosian and Frederik Herzberg.

Ultraproduct construction of representative utility functions with infinite-dimensional domain

Abstract: This

paper applies model theory to macroeconomic theory. In mathematical

models of macroeconomic theory, the hypothesis of a "representative

agent'' is ubiquitous, but the search for a rigorous justification has

so far been unsuccessful and was ultimately abandoned until very

recently. Herzberg (2010) constructed a representative utility function

for finite-dimensional social decision problems, based on an bounded

ultrapower construction over the reals, with respect to the ultrafilter

induced by the underlying social choice function (via the

Kirman--Sondermann (1972) correspondence). However, since the decision

problems of macroeconomic theory are typically infinite-dimensional,

Herzberg's original result is insufficient for many applications. We

therefore generalise his result by allowing the social alternatives to

belong to a general reflexive Banach space $W$; in addition to known

results from convex analysis, our proof uses a nonstandard enlargement

of the superstructur eover $W\cup\mathbb{R}$, obtained by a bounded

ultrapower construction with respect to the Kirman--Sondermann

ultrafilter.

- Alexander Carstensen Block.

The Modal Logic of Symmetric Extensions

Abstract: Symmetric

forcing was first used by Paul Cohen to show the independence of the

Axiom of Choice from $\mathsf{ZF}$. It is a modification of the method of forcing, a

flexible set theoretic model construction assigning to a given

(countable transitive) model of $\mathsf{ZF}$ outer models, called generic

extensions, each of which is "generated" from a single new object. The

forcing method itself is unsuitable for establishing the independence of

the Axiom of Choice from $\mathsf{ZF}$, since every generic extension of a

$\mathsf{ZFC}$-model also satisfies the Axiom of Choice.

So instead Cohen used symmetric forcing, considering symmetric

submodels of generic extensions instead of actual generic extensions.

Symmetric submodels are obtained by deleting objects whose descriptions

(in terms of the so-called forcing language) are not sufficiently stable

under a certain group action. This process may remove well-orderings

of sets still present, thereby breaking the axiom of choice.

We refer to symmetric submodels of generic extensions in short as

symmetric extensions. A central property of symmetric extensions (and

generic extensions as well) is that truth in them can be expressed in

the ground model one starts with. More precisely for every first-order

sentence $\varphi$ in the language of set theory there is a first-order sentence

$\varphi^*$ in the language of set theory such that for any (countable

transitive) $\mathsf{ZF}$-model $M$ we have that $M \models \varphi^*$ iff for any symmetric

extension $N$ of $M$: $N$ satisfies $\varphi$.

Using this fact we can give a correspondence between formulas in the

basic modal language and first-order sentences in the language of set

theory as follows. A translation is a map assigning proposition letters

to sentences in the language of set theory. We can extend any

translation $T$ to an interpretation $\underline{T}$ assigning formulas in the basic

modal language to first-order sentences in the language of set theory by

recursively setting:

(1) $\underline{T}(p) := T(p)$ for any proposition letter $p$;

(2) $\underline{T}(\bot) := (0=1)$;

(3) $\underline{T}(A+B) := \underline{T}(A) + \underline{T}(B)$ for any logical connective $+$;

(4) $\underline{T}(\Box A) := [\underline{T}(A)]^*$

With this we can define the ZF-provable modal logic MLS of symmetric

forcing by setting $MLS := \{A \mid ZF$ proves $\underline{T}(A)$ for any translation $T\}$

This definition is analogous to the definitions of the corresponding

modal logic of forcing as introduced and investigated by Joel Hamkins

and Benedikt Löwe in [1]. Using an adaptation of their methods we show

that MLS = S4.2 and therefore that MLS coincides with the modal logic of

forcing.

References:

1. JOEL D. HAMKINS, BENEDIKT LÖWE, The modal logic of forcing.

Transactions of the American Mathematical Society, vol. 360 (2008), no.

4, pp. 1793-1817

- Vasco Brattka, Guido Gherardi and Rupert Hölzl.

Probabilistic computability and choice

Abstract: The

aim of this talk is to give an overview of the systematic investigation

we have carried out onto the field of randomized algorithms for real

numbers.

In particular, the notions of Las Vegas and probabilistic computability for infinite sequences are presented.

As case studies we analyze the problems of computing Nash equilibria and solutions to the intermediate value theorem.

See the arXiv version for more details.

- Yong Cheng, Sy-David Friedman and Joel David Hamkins.

Large cardinals need not be large in HOD

Abstract:

We

prove that large cardinals need not generally exhibit their large

cardinal nature in HOD. For example, a supercompact cardinal \kappa need

not be weakly compact in HOD, and there can be a proper class of

supercompact cardinals in V, none of them weakly compact in HOD, with no

supercompact cardinals in HOD. Similar results hold for many other

types of large cardinals, such as measurable and strong cardinals.

- Merlin Carl.

Algorithmic Randomness for Infinitary Machines

Abstract: During

the last decade, a number of machine models of transfinite

computability have been proposed and studied, such as Infinite Time

Turing Machines, Infinite Time Register Machines and Ordinal Turing

Machines.

We consider algorithmic randomness for machine models of infinitary

computations. We show that a theorem of Sacks, according to which a real

$x$ is computable from a randomly chosen real with positive probability

iff it is recursive holds for many of these models, but is independent

from $ZFC$ for ordinal Turing machines. Furthermore, we define an

analogue of Martin-Löf-randomness for Infinite Time Register Machines

and show that some classical results like van Lambalgen's theorem

continue to hold.

- Bernhard Fisseni. What makes stories similar? – Some Empirical and Formal Results

Abstract: We

present a survey of the results and findings of the research project

*What makes stories similar?* funded by the John Templeton Foundation

from October 2011 to May 2015.

The research project *What makes stories similar?* funded by the

John Templeton Foundation from October 2011 to May 2015 and coordinated

by Benedikt Löwe as principal investigator aimed at providing a

discussion of similarity for narratives. Guided by its eponymous

question and based on Löwe's previous research on comparability and

(in)compatibility of formal frameworks, the project aimed to find out

whether there are structural (rather than presentational) properties

that contribute or even define story similarity, whether they can be

expressed in formal representation systems, and how such representations

can be empirically tested.

- Walter Hoering. A Formalism allowing Non-deterministic Strategies

Abstract:

Turing and Feferman have shown, how to interpret and use Gödel's incompleteness

theorem in a positive way - i.e. as a tool to construct progressions of recursively

enumerable theories. These theories work with constructive ordinals

and are either effectively realizable and recursively enumerable,

therefore Gödel-extendible, using as they do, only effective rules and

only a recursively enumerable part of the constructive ordinals, or they

reach beyond he effective, using some version of the omega-rule or a

non-trivial section of the constructive ordinals.

If one deals with these progressions, one can work within a conventional system or

abandon it fore another one, which may be stronger. This

unconditioned and permanent capacity for choice is beyond the capacities

of a conventional formal system. At this point we cannot but ask for

the difference between the capacities of a human being and the

possibilities of a conventional formal system. The answer is quite

simple: the difference lies in the freedom of man, in his capacity for

random choice. So we come to look for a new kind of formal system

featuring this capacity. We construct a new kind of formal system, the

non-deterministic systems which have non-determinism built in in a

presumably novel way. It seems to go beyond the recursively enumerable.

We call a system non-deterministic, if it can take a random input or

alternately also a deterministic input at the same place. Some of these

systems are capable of strengthening themselves indefinitely by dint of

their own strength, just as Baron Münchhausen pulled himself and the

horse upon which he was riding out of a pernicious swamp just by pulling

up his own tuft of hair. Therefore we propose the name of

Münchhausen-Systems for this kind of system. Another adventure of the

Baron was that, riding on a canon ball, he suddenly decided to change

direction by jumping onto another canon ball approaching from the

opposite direction. Also this story will have a counterpart here. Only

that the rather pedestrian analogue of the Baron's instantaneous

resolution, a random generator, will be used to create an infinite

sequence of natural numbers and thereby of systems.

Turing in his conception of a device, later to be called Turing

machine, dealt with linear computation, proceeding without branching:.

"There is only one way to go on, straight ahead".

Housewives and artisans know: If I do one thing, I will have to skip something

other. It is this kind of inescapable choice between many alternatives, which we model in our systems.

Our subject is computability, deducibility if free, non-determined

decisions are allowed to intervene an infinite number of times: "There

are always many directions to go on" In our Münchhausen Systems

(M-systems for short) we always face the alternative: to stay where we

are and to continue working in the present surrounding - or - to change

into a new environment.

If one is interested in evaluating sets like O, Kleene's

constructive ordinals, or B the set of recursive well-founded trees one

deals with positive inductive definitions I.e. with productive sets - to

which M-Systems can be applied.

- Mirjana Ilic and Branislav Boricic.

Is t really needed in formulating sequent calculi for relevant logics?

Abstract: The

first Gentzen calculi for distributive relevant logics were presented

in the late 1960's and early 1970's, when Dunn published Gentzen system

for positive relevant logic, and Minc formulated the Gentzen system

for positive relevant logic with necessity. In those calculi, an empty

left-hand side, in the left premise of the cut rule can lead to

irrelevance (the modal fallacy would be derivable, then).

There are two ways to prevent the inference of the modal fallacy in

those calculi. However in both of them, the constant t, which is not

the part of the standard language for relevant logics, is needed.

Giambrone and Brady also followed those ideas in formulating the

sequent calculi for RW+ and RW, confirming the need for t in those

logics, also. However, it has been shown recently, that it is possible

to obtain the cut--free sequent calculus for RW without the help of t.

We present the sequent calculus GRW of RW. The calculus GRW is with

right-handed sequents, based on unsigned formulae, unlike Brady's

calculus L'RW of RW, which is based on single-conclusion sequents with

signed formulae. The calculus GRW has a much neater presentation,

although the proof that it is cut--free is somewhat more complex.

- Franziska Jahnke.

Definable Henselian Valuations

Abstract: In

a henselian valued field, many arithmetic or algebraic questions can be

reduced, via the henselian valuation, to simpler questions about the

value group and the residue field. By the celebrated Ax-Kochen/Ershov

Principle, in fact, if the residue characteristic is 0, `everything' can

be so reduced:

the 1st-order theory of a henselian valued field (as a valued field)

is fully determined by the 1st-order theory of its value group (as an

ordered abelian group) and of its residue field (as a pure field).

Conversely, in many cases, a henselian valuation is so intrinsic to a

field that it is itself encoded in the arithmetic of the field, i.e.

its valuation ring is a 1st-order parameter-free definable subset of the

field (in the language of rings). In this talk, we investigate the

question when a henselian valued field admits such a non-trivial

0-definable henselian valuation.

Clearly, separably or real closed fields admit no non-trivial

definable valuations, and, by the work of Prestel and Ziegler, there are

further examples of henselian valued fields which do not admit a

0-definable non-trivial henselian valuation. We give conditions on the

residue field which ensure the existence of a parameter-free definiton.

Using recent work of Prestel, we deduce that in every case discussed in

the talk the formula can be found to be either existential-universal or

universal-existential.

The material presented includes joint work with both Jochen Koenigsmann and Arno Fehm.

- Yurii Khomskii.

Suslin proper forcing and regularity properties

Abstract:

Recently, in joint work with Vera Fischer and Sy Friedman, we

obtained a number of new results separating regularity properties on the

$\Delta^1_3$, $\Sigma^1_3$ and $\Delta^1_4$ levels of the projective hierarchy. In

this talk I will present some of the methods we used to obtain these

results, focusing on the concept of "Suslin/Suslin+ proper forcing", a

strengthening of Shelah's concept of proper forcing which only works for

easily definable forcing notions, and which was a crucial technical

ingredient in our proofs.

- Takayuki Kihara and Arno Pauly.

Point degree spectra of represented spaces

Abstract: We

introduce the point spectrum of a represented spaces as a substructure

of the Medvedev degrees. The point spectrum is closely linked to

isomorphism type of a space w.r.t. countably continuous maps, and via

this, also with the dimension. Through this new connection between

descriptive set theory and degree theory (as part of computability

theory) we can answer several open questions.

As a result on the way, we prove that any admissible represented

space with an effectively fiber-compact representation is already

computably metrizable.

- Giorgio Laguzzi.

Roslanowski and Spinas dichotomies

Abstract: (the talk is bases on joint works with Yurii Khomskii and Wolfgang Wohofsky)

We investigate two tree forcings for adding infinitely often equal

reals: the full splitting Miller forcing $\mathbb{FM}$, introduced by

Roslanowski in [1], and the infinitely often equal trees

forcing $\mathbb{IE}$, implicitly introduced by Spinas in [2].

We prove results about Marczewski-type regularity properties associated

with these forcings as well as dichotomy properties on

$\mathbf{\Delta}^1_2$ and $\mathbf{\Sigma}^1_2$ levels, with a

particular emphasis on a parallel with the Baire property. Furthermore,

we prove that our dichotomies hold for all projective sets in Solovay's

model, and that the use of an inaccessible is necessary for both.

Finally we also investigate the Marczewski-type ideals related to

$\mathbb{FM}$ and $\mathbb{IE}$, proving some results of orthogonality

with other tree forcings ideals.

[1] A. Roslanowski, On game ideals, Colloq. Math., 59(2):159-168, 1990.

[2] O. Spinas, Perfect set theorems, Fund. Math., 201(2):179-195, 2008.

- Javier Legris.

Existential Graphs as a Framework for Introducing Logical Constants and Logical Systems

Abstract: Charles

S. Peirce conceived his diagrammatic logic of the Existential Graphs

(EGs) as the best formulation of logical systems, according to his

iconic conception of logic and his idea of “exact logic”. Consequently

they constituted a general methodology for the mathematical treatment of

deduction based on notions from topology. Thus, the EGs open a fully

new direction in symbolic logic. Peirce formulated different

diagrammatic systems for the EGs that have been intensively studied and

expanded in the last years.

In this paper a new interpretation of Peirce’s EGs is introduced by

applying them as a framework for defining logical constants and logical

systems. This interpretation is coherent with the idea of the

‘productive ambiguity’ of diagrammatic representation implied in

Peirce’s own conception. Diagrams are open to different interpretations

because of their purely structural nature. Furthermore, this

interpretation attempts to build up a bridge between Peirce’s EGs and

the current perspective in logic of Structural Reasoning. According to

this, EGs could be properly understood as a kind of Structural

Reasoning, in the sense used by Peter Schroeder-Heister and originated

in Gerhard Gentzen’s ideas. Instead of using sequent style systems, the

EGs can be used in order to formulate general properties of deduction

and to define logical concepts. Instead of combinatorial analysis and

recursion (as in the case of sequent systems), EGs can be studied by

topology. In this sense, the EGs can be interpreted as an account of

logical structures. More specifically, the ‘scroll’ formulated by Peirce

in early formulations of the EGs will be used to express an

implication structure.

The main claims of the paper can be summarized as follows: (1)

diagrams can be used in two different senses: (a) as an intuitive way to

express the nature of deduction in terms of diagrams, (b) as a

mathematical tool to characterize logical constants of different logic

systems. (2) The theory for formulating logic systems evolves from a

coherent philosophical perspective, so that there is a clear

correspondence between logical theory (including a topological

framework) and philosophical theory (the iconic nature of deduction).

(3) The philosophical theory does not make deduction and logical

concepts dependent on ordinary language.

- Philipp Lücke.

Infinite fields with free automorphism groups

Abstract: Shelah

proved that a free group of uncountable rank is not isomorphic to the

automorphism group of a countable first-order structure. In contrast,

Just, Shelah and Thomas showed that it is consistent with the axioms of

set theory that there is a field of cardinality $\aleph_1$ whose

automorphism group is a free group of rank $2^{\aleph_1}$. Motivated by

this result, they ask whether there always is a field of cardinality

$\aleph_1$ whose automorphism group is a free group of rank greater than

$\aleph_1$.

I will present joint work with Saharon Shelah that shows that the

free group of rank $2^\kappa$ is isomorphic to the automorphism group of

a field of cardinality $\kappa$ whenever $\kappa$ is a cardinal

satisfying $\kappa=\kappa^{\aleph_0}$. The techniques developed in the

proof of this statement also show that the existence of a cardinal

$\kappa$ of uncountable cofinality with the property that there is no

field of cardinality $\kappa$ whose automorphism group is a free group

of rank greater than $\kappa$ implies the existence of large cardinals

in certain inner models.

- Roman Murawski.

Philosophy of logic and mathematics in Warsaw School (the case of Tarski and Mostowski)

Abstract: The aim of the talk is to analyze main trends and tendencies, main standpoints and

views in the philosophical reflection on mathematics and logic in Warsaw School between

the wars.

This was the time of intensive development of mathematics (Polish Mathematical

School) and of logic (Warsaw Logical School, Lvov-Warsaw Philosophical School) in

Poland. Hence a natural question arises whether this development of mathematics and

logic was accompanied by philosophical reflection on those disciplines, whether the researches

were founded on and stimulated by certain fixed philosophical presuppositions.

On the other hand philosophy of mathematics and logic is based on and uses certain

results of metamathematics, of the foundations of mathematics and of logic. Did logical

achievements influence the philosophical reflection?

The philosophical views of main logicians and mathematician (among them of Tarski

and Mostowski) will be presented and analyzed.

We come to the conclusion that Polish logicians and mathematicians being convinced

of the importance of philosophical problems and knowing quite well the current philosophical

trends treated logic and mathematics as autonomous disciplines independent

of philosophical reflection on them, independent of any philosophical presuppositions.

Therefore they sharply separated mathematical and logical research practice and philosophical

discussions concerning logic and mathematics. Philosophical views and opinions

were treated as “private” matter that should not influence the mathematical and metamathematical

investigations. On the contrary, in the latter all correct methods could and

should be used. This “methodological Platonism” enabled Polish logicians and mathematicians

to work in various areas without being preoccupied by philosophical dogmas.

In controversial cases, as for example in the case of the axiom of choice in set theory, their

attitude can be characterized as neutral – without making any philosophical declarations

they simply considered and studied various mathematical consequences of both accepting

and rejecting the controversial principles and investigated their role in mathematics.

- Hugo Nobrega.

Obtaining Weihrauch-complete functions and relations from sets of real numbers

Abstract: We report on research that connects the theory of Weihrauch degrees to the descriptive set theory of classes of functions.

Among other previously known results, functions $f_n$ for each

natural number $n$ and a relation $R$ had already been found which

characterize the Baire class $n$ functions and the class of functions

preserving $\mathbf{\Sigma}^0_2$ under preimages, respectively, in the

sense that a given function is in one of the mentioned classes if and

only if it is Weihrauch-reducible to the corresponding function or

relation.

In this talk, we show that functions characterizing the Baire class

$\alpha$ functions can be obtained by a general construction applied to

any Wadge-complete set for $\mathbf{\Sigma}^0_\alpha$, for all $\alpha

< \omega_1$, and show that the $f_n$ functions are special cases of

this construction.

Furthermore, we show that relations characterizing the classes of

functions with a certain type of partition property related to the

famous Jayne-Rogers theorem --- namely, for each $\alpha < \omega_1$,

the class of functions whose domain can be partitioned into countably

many $\mathbf{\Pi}^0_\alpha$ sets such that the function restricted to

each set in the partition is continuous --- can also be obtained by

another general construction applied to any Wadge-complete set for

$\mathbf{\Sigma}^0_\alpha$.

In particular, using the Jayne-Rogers theorem we show that $R$ can also be seen as a special case of this construction.

- Edoardo Rivello. Revision without Choice

Abstract: Revision

sequences were introduced in 1982 by Herzberger and Gupta

(independently) as a mathematical tool in developing what is since then

known as "The revision theory of Truth". Later, the same tool was

applied to other areas of logic and philosophy, like the theory of

definitions, the analysis of circular concepts, the theory of rational

belief, and others. In the original and informal presentation of the

theory, the revision sequences are ordinal-length sequences, namely

operations defined on the proper class of all ordinal numbers. However,

it is a common feature of most revision theories proposed in the

literature the fact that the same theory can be obtained by quantifying

over the class of all limit-length revision sequences, so providing a

formalisation of the theory in ZFC. Further, for a given theory, by an

application of the Lowenheim-Skolem theorem, McGee’s theorem establishes

an upper bound for the length of the revision sequences we need to

quantify over.

McGee's theorem and analogous or related results, are usually proved

by invoking some amount of the Axiom of Choice, in the form of the

Lowenheim-Skolem theorem or in another. In this talk I will present a

general method for reproving such results without using any form of the

Axiom of Choice. The method applies to a wide range of revision theories

encompassing Harzberger's, Gupta's and Belnap's original ones.

The talk is based on the following recent works (preprints available on academia.edu):

E. Rivello, Stability-Dependent Revision, submitted, 2013.

E. Rivello, Revision without the Axiom of Choice, submitted, 2014.

- Carsten Rösnick.

On Type-2 Complexity of Some Operators in Geometry, Topology and Analysis

Abstract: This

talk will be about my yet-to-be-finished PhD thesis. In my thesis I

analyze the computational complexity of some operators in geometry and

analysis based on earlier work of Ko and Friedman (1981/84, 1991), Chou

and Ko (1995, 2005), Brattka et al (1999, 2003), Labhalla et al (2001),

Zhao and Müller (2008), Kawamura and Cook (2011), and others. Examples

of operators include binary set union, intersection and projection, as

well as integration, differentiation, reciprocals $f \mapsto 1/f$ and

inverses $f \mapsto f^{-1}$. These, and more, operators are realized (in

the TTE-sense/"Weihrauch model") on oracle Turing machines where their

realizers are type-2 functionals, i.e. functionals $\phi$ of form

$\mathbb{N}^{\mathbb{N}} \times \mathbb{N} \to \mathbb{N}$.

In order to speak about

computations, we first have to introduce representations for spaces like

the class of all closed subsets of $\mathbb{R}^n$, or the class of continuous,

smooth or analytic functions. Usually, there is more than one "natural"

choice of representation, but as it will turn out, most of the ones we

will see are polytime equivalent---thus, from the perspective of

complexity, it does not matter which one we choose when devising the

complexity of operators. This in a sense even proves the complexity

bounds for operators to be 'robust'. However, obviously not all

representations turn out to be polytime equivalent: For example, there

are three quite natural representations in the realm of closed sets

which are not polytime equivalent; the first two because of the

difficulty to get global information out of local information, the

second two due to the need to add additional data.

Having settled

the question about some representations and their relations, we turn to

operators: the projection for sets, as well as integration and function

inversion for continuous functions. Projection proves to be polytime if

restricted to convex compact sets; while the lack of convexity links it

to $P$ vs $NP$ in the discrete world. Integration is known to map polytime

functions to polytime ones when restricted to analytic functions, but

over smooth functions it is not polytime---just like for continuous

functions. So, in a sense, smoothness does not help from a complexity

point of view. This gap between polytime over analytic and exptime over

smooth functions can be quantified in terms of the Gevrey level

(loosening the bounds on the growth of analytic functions depending on

said level)---which we will do. It turns out that the complexity of

integration depends exponentially on this level. We will also take a

look at a third operator, function inversion $f \mapsto f^{-1}$,

sufficient preconditions for this operator to be at least computable,

and the barriers to surpass due to its linkage to the existence of one

way functions and one way permutations.

- Sam Sanders.

Computing with infinitesimals

Abstract: See PDF document.

- Ralf Schindler, Sandra Uhlenbrock and W. Hugh Woodin.

Producing Iterable Models with Woodin Cardinals from Optimal Determinacy Hypotheses

Abstract: Projective

determinacy is the statement that for certain infinite games, where the

winning condition is projective, there is always a winning strategy for

one of the two players. It has many nice consequences which are not

decided by ZFC alone, e.g. that every projective set of reals is

Lebesgue measurable. An old so far unpublished result by W. Hugh Woodin

is that one can derive specific countable iterable models with Woodin

cardinals from this assumption. Work by Itay Neeman shows the converse

direction, i.e. projective determinacy is in fact equivalent to the

existence of such models. These results connect the areas of inner model

theory and descriptive set theory. We will give an overview of the

relevant topics in both fields and, if time allows, sketch a proof of

Woodin's result.

- Peter Schuster and Davide Rinaldi.

Eliminating Krull's Lemma for Horn Clauses

Abstract: In

the language of rings we consider implications from a finite

conjunction to a finite disjunction of atomic formulas. It is well-known

that one can characterise algebraically when such implication can be

proved for (commutative) rings and for integral domains, respectively.

By interpolation, we extend this characterisation to the provability of

such implications for reduced rings: i.e., rings in which only 0 is

nilpotent. Scott entailment relations are particulary suited for proving

those characterisations. From the mono-conclusion case one can

read off a syntactical proof that the theory of integral domains is

conservative for Horn clauses over the theory of reduced rings. This

allows to eliminate Krull's Lemma, the appropriate incarnation of Zorn's

Lemma, from many a "short and elegant'' proof in commutative algebra

that works by reduction modulo a generic prime ideal. With only

little effort, the approach above can be carried over from the case of

integral domains over reduced rings to the case of local rings over

rings: just replace ideals by filters, and "is zero'' by "is

invertible''. With hardly more effort one can also deal with occurrences

of mixed predicates in the antecedent. All proofs on the

meta-level are constructive and elementary. Within the given theories,

however, it is irrelevant whether one thinks provability in terms of

classical or intuitionistic logic: all formulas under consideration are

geometric implications. In competition with related results from

dynamical algebra, we eliminate also the condition that every integral

domain or local ring be non-trivial, and thus avoid to speak of

absurdity. This opens up the possibility to step down from classical

logic not only to intuitionistic but also to minimal logic.

- Johannes Stern.

A New Norm for Truth and the Modal Logics of Axiomatic Theories of Truth

Abstract: Due to a seminal result by Solovay it is well known that the modal

logic $GL$ is the modal logic of the natural provability predicate of $PA$

in $PA$. In other words $GL$ expresses exactly the modal properties of the

natural provability predicate of $PA$. At least in principle one could try

determining the modal logic of other sentential predicates of an

arithmetic theory or an extension thereof. In particular it seems

interesting to look for the modal logic of the truth predicate of

various axiomatic theories of truth. While this question is interesting

in its own right we shall focus on its philosophical relevance and argue

that such an investigation leads to a new norm for theories of

reflexive truth.

We argue for this this claim by discussing the recent arithmetic

completeness result by Czarniecki and Zdanowski. Czarnecki

and Zdanowski show that the normal modal logic $KDD_C$, which extends

the basic normal modal logic $K$ by the modal principles

$$\begin{align*}

(D)&&\Box\phi\rightarrow\Diamond\phi&&\\

(D_C)&&\Diamond\phi\rightarrow\Box\phi&&

\end{align*}$$

is the modal logic of the truth predicate of symmetric theories of

truth (although Czarnecki and Zdanowski do not mention this fact). A

theory of truth is called symmetric if and only if the truth predicate

commutes with all boolean connectives and is closed under the rules of

T-introduction and T-elimination. The ingenious strategy employed by

Czarnecki and Zdanowski in showing the arithmetic completeness of

$KDD_C$ with respect to symmetric theories of truth is to show that

$KDD_C$ is the maximal normal modal logic that can be consistently

extended by the relevant modal instances of the diagonal lemma. Since

$KDD_C$ is sound with respect to symmetric theories of truth it then

must be the modal logic of the truth predicate of symmetric theories of

truth.

The observation that $KDD_C$ is a maximal modal logic lead us to

proposing the following new norm for reflexive theories of truth:

The modal logic of the truth predicate of reflexive

theories of truth ought to be maximal (in the sense of Czarnecki and

Zdanowski).

We argue that this norm should replace the naive desiderata that all

T-biconditionals ought to be derivable, which, however, cannot be

satisfied in classical and consistent theories of truth. We then

illustrate and discuss the consequences of adopting such a norm and also

point to certain limitations.

- Elena Tatievskaya.

Symbol and Rule: Cassirer’s treatment of Kant’s Schematism

Abstract: Cassirer

(The Philosophy of Symbolic Forms (1923-1929)) regards cognition as

symbolizing that follows rules. Such rules are embodied in systems of

meaning invariants used to order objects and govern scientific as well

as pre-scientific cognition, language and art. Cassirer compares symbols

with Kant’s transcendental schemata that mediate between categories and

sensible appearances and uses the terms “schema” and “rule” as

interchangeable. Hence it may be asked what the rule-like function of

the schema consists in and whether this notion therefore could help to

clarify Cassirer’s concept of a symbol and of how rules of symbolizing

work.

I argue that transcendental schemata can be regarded as belonging to

the rules of understanding that allow of judging. Using Krausser’s

definition of the topological structure of the intuition of time

(„Kant’s Schematism of the Categories and the Problem of Pattern

Recognition“, Synthese 33, 1976, 175-192) which presupposes the

relations of simultaneity and following between different elements of a

sensibly intuited manifold I propose to represent principles of pure

understanding and so-called “schema-judgments” or “criteria” that

connect schemata with categories in such a way that the principles

define possible antecedents of the propositions that fix such criteria.

The consequents of such propositions involve the expression of the form

of the judgment that becomes possible due to the application of the

schema. Thus a transcendental schema works as a rule according to which

the category represented by the schema defines some logical property of

the judgment that concerns the apprehended object. Such a property could

be the quantity, quality, form or modality of the judgment.

If we take for example the categories of quantity and consider the

number as “Anzahl” of the elements of a sensibly given manifold of which

some property is predicated in a judgment the general principle of the

axioms of intuition that requires that all intuitions be extensive

quantities can be formulated in the form “Either an appearance differs

from other appearances simultaneous with it insofar as they in their

turn are not simultaneous with each other or some appearances of the

given manifold differ with respect to their simultaneity from the other

ones or the given manifold contains no appearances that are not

simultaneous with at least one of its elements”. Then the proposition

that represents the schema of e.g. the category of plurality is “If a

given manifold contains an appearance that is simultaneous with only

some of the other appearances and differs with respect to simultaneity

from the remaining ones a certain property can be predicated of some of

the appearances of the manifold”.

Cassirer’s symbol functions analogously to a schema representing a

category since it orders objects due to its ability to represent a

system of meaning invariants which in its turn determines the rule-like

role of the symbol. The cognition is not restricted to the formation of

judgments but involves the production of symbols of different kinds.

- Gregory Wheeler.

Beware of B

Abstract: No

epistemic logic should include the Brouwersche schema, (B), as (B) and

(K) yield a pair of known schemas (Chellas 1980) which I dub the BUM

schemas. BUMs are a disaster for epistemic logics in general, although

BUMs are not problematic for provability logics, which suggests that (B)

points out a sharp distinction between (regular) epistemic logics and

logics of provability. To mark the distinction, and to preserve

Brouwer’s good name, I propose epistemic logicians hereafter refer to

(B) as the Blog Schema. Finally, with the downfall of (B) joining the

ranks of (5) (Hintikka 1962) and (4) (Williamson 2000), hints of a new

case against regular systems of epistemic logic comes into view: (K)

does not play well with other modal schemas.

- Gunnar Wilken and Andreas Weiermann.

Goodstein sequences for prominent ordinals up to the ordinal of $\Pi^1_1-CA_0$

Abstract: We present a smooth approach to generate Goodstein

principles which are true but unprovable in theories of iterated

inductive definitions, $ID_n$, and eventually the theory $\Pi^1_1-CA_0$.

This work was published in the Annals of Pure and Applied Logic in 2013.

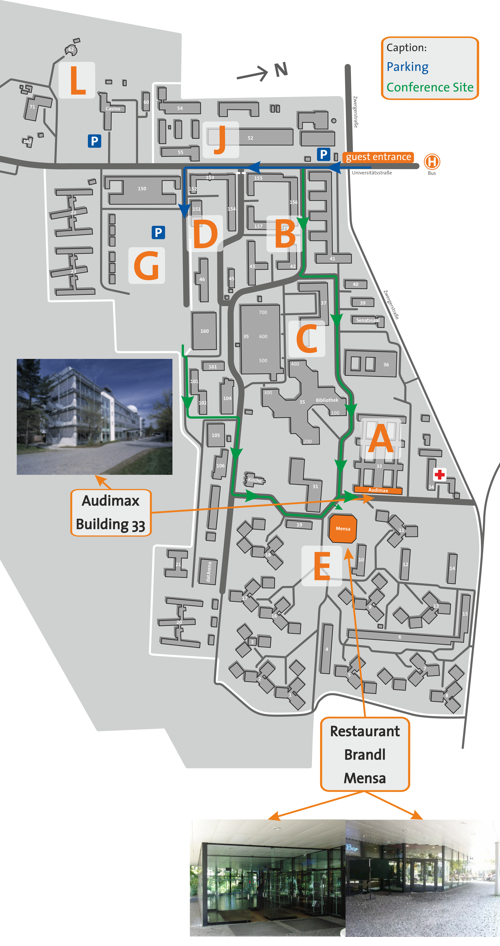

Map

|